The Latest from Delta Farm Press

Gregg Chase Sain

Cotton

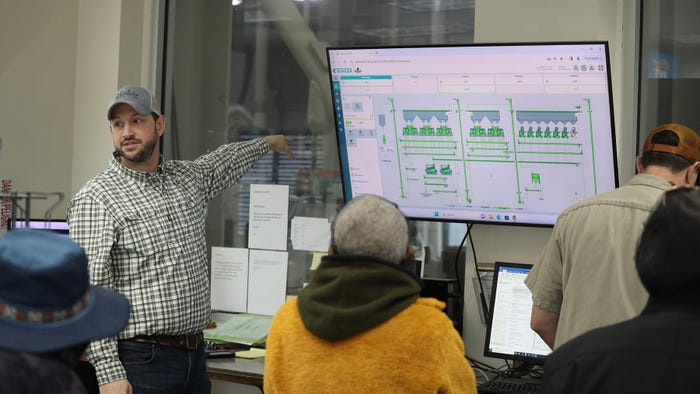

New cotton gin in NE Arkansas

Graves Gin is a new facility built from the ground up with state-of-the-art systems.

Market Overview

| Contract | Last | Change | High | Low | Open | Last Trade |
|---|---|---|---|---|---|---|
| Jul 24 Corn | 443 | +6.75 | 443.5 | 436 | 436.75 | 10:24 PM |
| Jul 24 Oats | 349 | +3 | 349.75 | 341 | 346 | 10:24 PM |
| May 24 Class III Milk | 17.8 | +0.42 | 17.88 | 17.37 | 17.38 | 06:54 PM |
| Jul 24 Soybean | 1165.75 | +16.75 | 1168 | 1145.75 | 1148.25 | 10:24 PM |
| Aug 24 Feeder Cattle | 253.5 | -0.8 | 254.95 | 252.625 | 253.65 | 06:04 PM |
| May 24 Ethanol Futures | 2.161 | unch | 2.161 | 2.161 | 2.161 | 10:24 PM |

Copyright © 2019. All market data is provided by Barchart Solutions.

Futures data are delayed by at least 10 minutes. Information is provided ‘as is’ and solely for informational purposes, not for trading purposes or advice.

To see all exchange delays and terms of use, please see the disclaimer.
